This New AI Voice Scam Could Fool Anyone—Here’s How to Beat It

Criminals are using artificial intelligence technology in a scary new AI scam, but knowing the red flags can help you avoid becoming their next victim

Picture this: You answer a phone call one day and hear a voice that sounds like your child. They tell you that they have been kidnapped and need cash for a ransom right away. You scramble to help—only to realize that the voice on the other end of the line isn’t your child but rather part of a sophisticated, terrifying new AI scam phone call.

That’s what happened to Arizona mother Jennifer DeStefano, who recently testified about her experience to the Senate. And unfortunately, her story is all too common. As artificial intelligence (AI) technology becomes cheaper and more accessible, criminals are increasingly using it to impersonate the voices of our friends and loved ones to trick us into sending them money. Imposter scams, the broader category these cons fall into, cost Americans $2.6 billion in reported losses in 2022, according to the Federal Trade Commission.

The good news? You can beat scammers at their own game. We spoke with cybersecurity experts to learn how these AI scam calls work, why they’re so hard to spot, how to avoid them, what to do if you become a target, the future of AI scams and more.


What is the new AI scam?

A clever scammer with a good AI program doesn’t need much more than a few-second recording of a loved one’s voice to be able to clone the person’s voice and apply their own script. From there, they can play the audio over the phone to convince their victims that someone they love is in a desperate situation and needs money immediately.

These aren’t your typical four-word phone scams or Google Voice scams. They’re even more advanced.

In one of the most common examples, parents or grandparents receive a call in what sounds like their child’s or grandchild’s voice, claiming to need money for ransom or bail, like the AI kidnapping scam DeStefano encountered. “We have seen parents targeted and extorted for money out of fear that their child is in danger,” says Nico Dekens, director of intelligence and collection innovation at ShadowDragon.

Eva Velasquez, president and CEO of the Identity Theft Resource Center, says that the center also receives reports of AI scam calls that convince victims their relative needs money to pay for damages from a car accident or other incident. Other scams include using a manager or executive’s voice in a voicemail instructing someone to pay a fake invoice, as well as calls that sound like law enforcement or government officials demanding the targeted individual share sensitive information over the phone.

How does this AI scam work?

It may take a few steps to pull together an AI scam, but the tech speeds up the process to such an extent that these cons are worryingly easy to produce compared with voice scams of the past. In a nutshell, this fraud follows the steps below.

Step 1: Collect the recording

To carry out an AI scam call, criminals first must find a five- to ten-second audio recording of a loved one’s voice, such as a clip from YouTube or a post on Facebook or Instagram. Then they feed it to an artificial intelligence tool that learns the person’s voice patterns, pitch and tone—and, crucially, simulates their voice.

These tools are widely available and cheap or even free to use, which makes them even more dangerous, according to experts. Some models need remarkably little material: Microsoft’s VALL-E, an experimental AI voice model, can create a replica of someone’s voice from just a three-second audio sample of them speaking. “As you can imagine, this gives a new superpower to scammers, and they started to take advantage of that,” says Aleksander Madry, a researcher at MIT’s Computer Science and Artificial Intelligence Laboratory.

Step 2: Feed the AI a script

Once the AI software learns the person’s voice, con artists can tell it to create an audio file of that cloned voice saying anything they want. Their next step is to call you and play the AI-generated clip (also called a deepfake). The calls might use a local area code to convince you to answer the phone, but don’t be fooled. Remember, the bad guys are capable of spoofing their phone numbers. Many phone-based fraud scams originate from countries with large call-center operations, like India, the Philippines or even Russia, according to Velasquez.

Step 3: Set the trap

The scammer will tell you that your loved one is in danger and that you must send money immediately in a way that’s hard to trace, such as with cash, via a wire transfer or using gift cards. Although these payment demands are telltale signs of wire fraud or a gift card scam, many victims panic and agree to send the money. “The nature of these scams plays off of fear, so in the moment of panic these scams create for their victims, it is also emotional and challenging to take the extra moment to consider that it might not be real,” Dekens says.

Scammers are also relying on the element of surprise, according to Karim Hijazi, the managing director of SCP & CO, a private investment firm focused on emerging technology platforms. “The scammers rely on an adequate level of surprise in order to catch the called target off guard,” he says. “Presently, this tactic is not well known, so most people are easily tricked into believing they are indeed speaking to their loved one, boss, co-worker or law enforcement professional.”

How has AI made scams easier to run—and harder to spot?

Imposter scams have been around for years, but artificial intelligence has made them more sophisticated and convincing. “AI did not change much in terms of why people do scams—it just provided a new avenue to execute them,” Madry says. “Be it blackmail, scam or misinformation or disinformation, all now can be much cheaper to execute and more persuasive.”

AI has been around for years in both criminal and everyday use (think AI password cracking and assistants like Alexa and Siri), but it used to be expensive and require massive amounts of computing power to run. As a result, shady characters needed a lot of time and expertise with specialized software to impersonate someone’s voice using AI.

“Now, all of this is available for anyone who just spends some time watching tutorials on YouTube or reading how-to docs and is willing to tinker a bit with the AI systems they can download from the internet,” says Madry.

On top of that, Velasquez notes that scammers running older imposter phone scams had to blame a poor connection or a bad accident to explain why their voice sounded different. But today’s technology “has become so good that it is almost impossible for the human ear to be able to tell that the voice on the other end of the phone is not the person it purports to be,” says Alex Hamerstone, a director with the security-consulting firm TrustedSec.

How can you avoid AI scams?

They may be quicker to create than past imposter scams, but AI scam calls are still labor-intensive for criminals, so your odds of being targeted are low, according to Velasquez. Most con artists want to use attacks that they can automate and repeat over and over again, and “you can’t do that with voice clones because it requires the victim to know and recognize a voice, not just some random voice,” she says.

The problem is that these attacks will continue to increase as the technology improves, making it easier to locate targets and clone voices. And there’s another reason now is the best time for criminals to run these cons: “As these kinds of capabilities are new to our society, we have not yet developed the right instincts and precautions to not fully trust what is being said via phone, [especially] if we are convinced that this is a voice of a person we trust,” Madry says.

That’s why it’s important to take proper precautions to boost your online security and avoid being targeted in the first place. Here are a few suggestions from the experts:

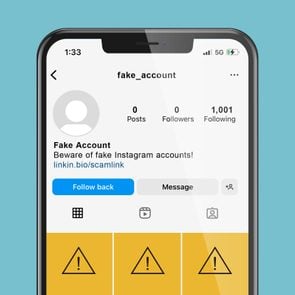

Make your social media accounts private

Before sharing audio and video clips of yourself on Facebook, Instagram, YouTube or other social media accounts, Velasquez recommends tightening your privacy settings so that only people you know and trust can see your posts. Users who keep their posts open to everyone should review and remove audio and video recordings of themselves and loved ones from social media platforms to thwart scammers who may seek to capture their voices, she says.

Use multifactor authentication

Setting up multifactor authentication for your online accounts can also make it more difficult for fraudsters to access them. This system requires you to enter a combination of credentials that verifies your identity—such as a single-use, time-sensitive code you receive on your phone via text—in addition to a username and password to log in to your account.

If you use biometric tools for verification, opt for ones that use your face or fingerprint rather than your voice to avoid providing criminals with the resources to create a deepfake.

Assign a secret phrase

Hijazi suggests coming up with a secret phrase or password that you can exchange with your loved one ahead of time. That way, if you receive a call alleging that they have been kidnapped or need money right away, you can authenticate that you are indeed speaking to the real person. “This does take some advance planning, but it’s a free and effective proactive measure,” he says.

Erase your digital footprint

Last, you can avoid being targeted by these scams in the first place by disappearing from the internet, as much as anyone can these days. Scammers often rely on the trail of breadcrumbs you leave about yourself online, from your pet’s name to your high school mascot, to learn about your life and build a scam around it.

“There are vast amounts of information freely and publicly available about almost every one of us,” Hamerstone says. “It is very simple to find out people’s family members, associations and employers, and these are all things that a scammer can use to create a convincing scam.”

The best defense, according to Hamerstone, is to share less. “Limiting the amount of information we share about ourselves publicly is one of the few ways that we can lower the risk of these types of scams,” he says.

Online tools like DeleteMe can automatically remove your name, address and other personal details from data brokers, which will make it more difficult for scammers to target you. Google is even working on a new Results About You tool that’ll alert you when your personal info appears in its search results and make it easy to request its removal.

What should you do if you receive an AI scam call?

Received a suspicious phone call? If someone who sounds like a loved one is demanding money over the phone, don’t panic. “It can be scary and disturbing to hear a loved one in distress, but the most important thing to remember is not to overreact,” Velasquez says.

Instead, experts recommend taking these steps before agreeing to send money to someone over the phone:

- Call your loved one directly using a trusted phone number.

- If you can’t reach them, try to contact them through a family member, friend or colleague.

- Ask the caller to confirm a detail that only your loved one would know, such as the secret phrase mentioned above.

- Alert law enforcement. They can help you verify whether the call you received is legitimate or a scam, Dekens says.

- Listen for any audio abnormalities, such as unusual voice modulation or synthetic-sounding voices, to identify a scammer. “Deepfake audio can lack natural intonation or exhibit glitches, like sounding angry or sad,” Dekens says. Hijazi also points out that this technology is not “conversational” yet and will likely fail to keep up if you keep asking questions.

- If you determine that the call is a scam, write down or screenshot the phone number that called you.

- Block the number on your phone so you don’t receive calls from it again.

- Dekens suggests putting the scam caller on speaker on your phone and recording the audio with a secondary phone. “It is a good way to preserve evidence,” he says.

- Report the call to your mobile phone carrier so the company can take appropriate action.

While AI scams are becoming more common, today’s AI technology isn’t always a bad thing. These funny AI mistakes and future robots that use AI show the positive (and silly) side of these tools.

About the experts

- Nico Dekens is the director of intelligence and collection innovation at ShadowDragon. He has more than 20 years of experience as an intelligence analyst with Dutch law enforcement.

- Eva Velasquez is the president and CEO of the Identity Theft Resource Center and the former vice president of operations for the San Diego Better Business Bureau.

- Aleksander Madry, PhD, is a faculty member at the Massachusetts Institute of Technology (MIT) and researcher at MIT’s Computer Science and Artificial Intelligence Laboratory. He’s currently on leave and working as a researcher with OpenAI.

- Karim Hijazi is the managing director of SCP & CO, a private investment firm focused on emerging technology platforms.

- Alex Hamerstone is the advisory solutions director for TrustedSec, a cybersecurity company.

Sources:

- Federal Trade Commission: “New FTC Data Show Consumers Reported Losing Nearly $8.8 Billion to Scams in 2022”

- U.S. Senate Committee on the Judiciary: “Subcommittee on Human Rights and the Law: Artificial Intelligence and Human Rights”